Tornadoes are unpredictable. Unlike other storms, where we may have a few days to prepare and evacuate, a tornado's exact point of formation and its path are very difficult—if not impossible—to predict. After the storm, communities must confront a sense of confusion. What happened? Where did the tornado hit? Is everyone okay?

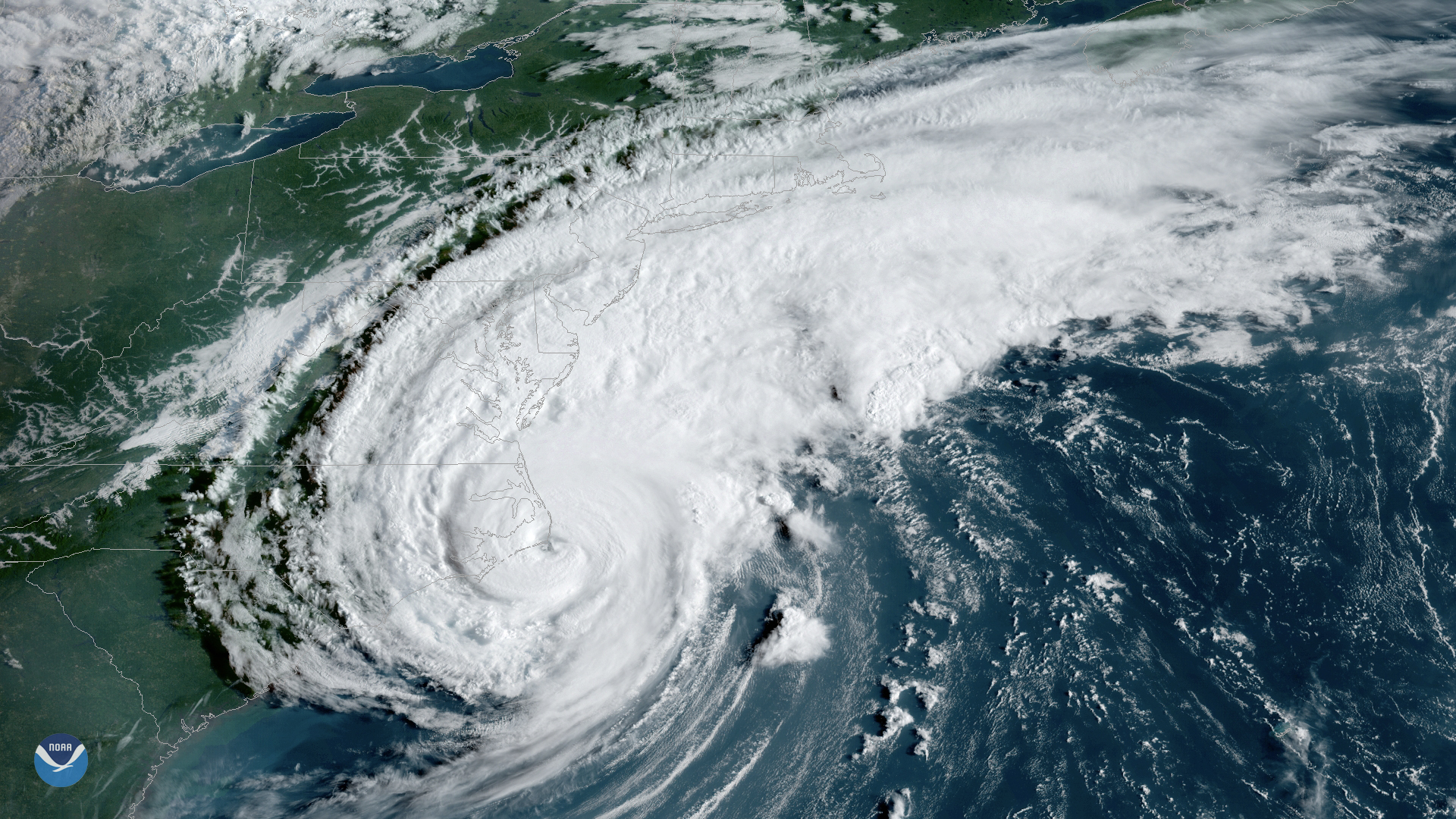

At CrowdAI, we’re a mission-driven team committed to using our technology to help others. As soon as the National Oceanic and Atmospheric Administration (NOAA) released its post-storm aerial imagery, we got down to the serious work of post-disaster damage assessment. The imagery was captured from aircraft on March 7, four days after the last confirmed tornado.

We had assessed damage after hurricanes and flooding on the East Coast before, but we’d never built a deep learning model to understand tornado damage. Wind damage from a hurricane and from a tornado may seem similar, but the two look strikingly different, especially when viewed from above. We wondered whether we could quickly repurpose any of our existing deep learning models for NOAA imagery to speed up imagery review and get data into the hands of those who need it.

Thankfully, we were able to use our existing building detector for NOAA imagery. We ran all of the imagery near areas of confirmed tornado touchdown (identified from local news reports and the National Weather Service) through our building detection model to get pinpoints on 90%+ of built structures (this includes things like sheds, barns, and stand-alone garages). Unfortunately, if a building had been destroyed, the model did not detect it as a structure, so we knew we had some work to do.
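
To give a sense of how imagery "near areas of confirmed tornado touchdown" might be selected, here is a minimal sketch that keeps only the image tiles whose center lies within a fixed radius of any touchdown point. This is an illustration, not our production code: the function names, the tile tuple layout, the 5 km radius, and the coordinates in the usage example are all assumptions made up for this example.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def tiles_near_touchdowns(tiles, touchdowns, radius_km=5.0):
    """Return ids of tiles whose center is within radius_km of a touchdown.

    tiles:      list of (tile_id, center_lat, center_lon)  (hypothetical layout)
    touchdowns: list of (lat, lon) for confirmed touchdown locations
    radius_km:  search radius; 5 km is an arbitrary illustrative default
    """
    return [
        tile_id
        for tile_id, lat, lon in tiles
        if any(haversine_km(lat, lon, t_lat, t_lon) <= radius_km
               for t_lat, t_lon in touchdowns)
    ]


# Usage with made-up coordinates: only "tile_a" is close to the touchdown.
tiles = [("tile_a", 36.16, -86.78), ("tile_b", 35.00, -85.00)]
touchdowns = [(36.17, -86.79)]
selected = tiles_near_touchdowns(tiles, touchdowns)
```

Only the selected tiles would then be passed to the building detector, which keeps the review workload focused on the affected area.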

Without an existing model to classify the damage to each detected structure, the CrowdAI team went “all hands on deck” and jumped straight into human review. We fed image chips into our platform’s annotation interface with the corresponding machine-generated detections. This meant all we had to do as human reviewers was (1) categorize the damage done to structures that the model had already detected and (2) identify any structures that were completely destroyed.
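
The two reviewer tasks can be sketched as a small merge step: every machine-generated detection receives a damage label from a human, and any destroyed structure the model missed is added by hand. The sketch below is hypothetical. The record fields, the `merge_review` name, and the label strings are assumptions for illustration and do not describe our platform's actual interface.

```python
def merge_review(detections, labels, added_destroyed):
    """Combine model detections and human review for one image chip.

    detections:      list of {"id": str, "x": int, "y": int} from the detector
    labels:          dict mapping detection id -> damage label chosen by a reviewer
    added_destroyed: list of (x, y) points a reviewer marked as destroyed
                     structures that the detector missed
    """
    # Task 1: every detected structure must have been categorized.
    unlabeled = [d["id"] for d in detections if d["id"] not in labels]
    if unlabeled:
        raise ValueError(f"detections missing a damage label: {unlabeled}")

    records = [
        {"x": d["x"], "y": d["y"], "damage": labels[d["id"]], "source": "model"}
        for d in detections
    ]
    # Task 2: add structures that were completely destroyed and never detected.
    records += [
        {"x": x, "y": y, "damage": "destroyed", "source": "human"}
        for x, y in added_destroyed
    ]
    return records


# Usage with made-up pixel coordinates and labels.
chip_detections = [{"id": "b1", "x": 40, "y": 52}, {"id": "b2", "x": 118, "y": 77}]
chip_labels = {"b1": "no-damage", "b2": "major-damage"}
chip_records = merge_review(chip_detections, chip_labels, added_destroyed=[(200, 31)])
```

Keeping a `source` field on each record makes it easy to pull out the human-added destroyed structures later as training examples.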

Categorizing damage to structures

Once our model had detected all of the structures in the relevant imagery, we fed those image chips into the image annotation module of our platform so that members of our team could quickly and easily categorize the damage done to each building. For ease, we used the building damage definitions we helped create for the xView2 challenge in 2018.

Since the most important datapoint about a building is its level of damage, and not its specific shape or footprint, we categorized the pinpoints (shown above as colorized dots) associated with each structure. These pinpoints also provide the geolocation of each building.
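
A pinpoint that carries both a damage level and a geolocation maps naturally onto a GeoJSON point feature. The following is a minimal sketch under stated assumptions: the `Pinpoint` class and its fields are hypothetical, and the four label strings mirror the damage levels published with the xView2 dataset rather than anything specific to our internal schema.

```python
import json
from dataclasses import dataclass
from enum import Enum


class DamageLevel(Enum):
    # Label strings assumed to follow the four xView2 damage levels.
    NO_DAMAGE = "no-damage"
    MINOR_DAMAGE = "minor-damage"
    MAJOR_DAMAGE = "major-damage"
    DESTROYED = "destroyed"


@dataclass(frozen=True)
class Pinpoint:
    """One structure: a point location plus its assessed damage level."""
    lat: float
    lon: float
    damage: DamageLevel

    def to_feature(self):
        """Return this pinpoint as a GeoJSON Feature (coordinates are lon, lat)."""
        return {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [self.lon, self.lat]},
            "properties": {"damage": self.damage.value},
        }


def to_feature_collection(pinpoints):
    """Bundle pinpoints into a GeoJSON FeatureCollection."""
    return {
        "type": "FeatureCollection",
        "features": [p.to_feature() for p in pinpoints],
    }


# Usage with made-up coordinates.
points = [
    Pinpoint(36.0, -86.5, DamageLevel.DESTROYED),
    Pinpoint(36.01, -86.49, DamageLevel.MINOR_DAMAGE),
]
geojson_text = json.dumps(to_feature_collection(points))
```

Storing a point instead of a footprint polygon keeps each record small and lets the output load directly into common mapping tools.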

Identifying destroyed structures

As our team went through the process of categorizing the damage done to structures detected by our model, we were on the lookout for those that had been completely destroyed by the storm. Because they no longer look like regular buildings, our building detector didn’t identify them as such. But human reviewers can use context clues to figure out where a building existed before the storm.

For instance, in the image below, the red dot shows what was a building before the storm. The debris pile is consistent with what we saw for other structures destroyed by a tornado, and visual comparison to Google Maps confirmed it to be the case. Now that we have examples of what a “building destroyed by wind damage” looks like, we can actually start to train a model that can account for this situation going forward.

Result

At the end of this 2-day sprint, we had built a dataset of pinpoints for every structure in the vicinity of the tornadoes, classified by level of damage. We shared this data with our U.S. government partners, as well as with NGOs helping people on the ground.

Going forward, we now have a dataset that can be used to begin training a model more accustomed to the specific types of damage caused by tornadoes. The next time a storm like this hits, the AI will be able to do the work, getting this crucial data out even faster than before.

Special thanks to NOAA for the open-source imagery used for this project.